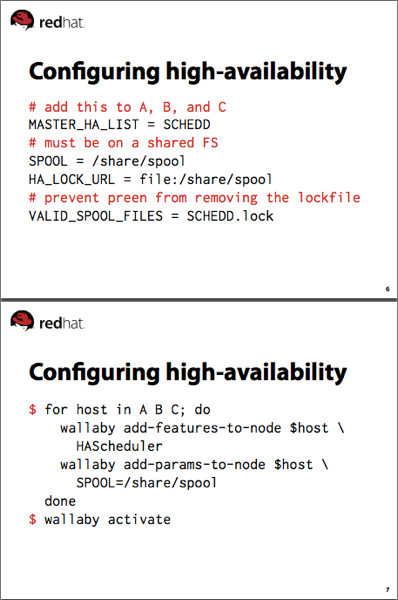

Rob Rati and I gave a tutorial on highly-available job queues at Condor Week this year. While it was not a Wallaby-specific tutorial, we did point out that configuring highly-available job queues is easier for users who manage and deploy their configurations with Wallaby; the tutorial slides compare the manual and automated approaches.

Configuring highly-available central managers (HA CMs) is rather more involved than configuring highly-available job queues. Here’s what a successful HA CM setup requires:

- all hosts that serve as candidate central managers (CMs) must be included in the `CONDOR_HOST` variable across the pool
- the `had` and `replication` daemons must be set up to run on candidate CMs
- the `HAD_LIST` and `REPLICATION_LIST` configuration variables must include a list of candidate CMs and the ports on which the `had` and `replication` daemons are running on these hosts
- various tunable settings related to shared-state and failure detection must be set
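The list values described above follow a simple pattern. The following minimal sketch, in plain Ruby with no Wallaby calls, builds them from a list of candidate CM hostnames using the same formats as the `setup-ha-cms` command at the bottom of this post. The helper name `ha_cm_params` is hypothetical, and the sketch assumes that each candidate CM's own configuration defines `HAD_PORT` and `REPLICATION_PORT`, so the lists refer to those macros rather than to literal port numbers.

```ruby
# Hypothetical helper: builds the values an HA CM setup needs from a list
# of candidate CM hostnames. $(HAD_PORT) and $(REPLICATION_PORT) are left as
# Condor macro references (assumed to be defined on each candidate CM).
def ha_cm_params(cms)
  raise ArgumentError, "at least one candidate CM is required" if cms.empty?

  {
    # Every node in the pool names all candidate CMs.
    "CONDOR_HOST"      => cms.join(", "),
    # Each candidate CM lists every peer's had daemon...
    "HAD_LIST"         => cms.map { |cm| "#{cm}:$(HAD_PORT)" }.join(", "),
    # ...and every peer's replication daemon.
    "REPLICATION_LIST" => cms.map { |cm| "#{cm}:$(REPLICATION_PORT)" }.join(", ")
  }
end

params = ha_cm_params(%w[fred barney])
params.each { |name, value| puts "#{name} = #{value}" }
```

Note that the same host list feeds all three values, which is exactly why generating them from one place (rather than editing each by hand) avoids mismatches.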

Wallaby includes `HACentralManager`, a ready-to-install feature with sensible defaults for setting up a candidate CM. The tedious work of constructing lists of hostnames and ports, and of ensuring that these are set everywhere they need to be, benefits greatly from Wallaby's scriptability. At the bottom of this post is a simple Wallaby shell command that sets up a highly-available central manager with several nodes serving as candidate CMs. To use it, download it and place it in your `WALLABY_COMMAND_DIR` (review how to install Wallaby shell command extensions if necessary). Then invoke it with:

```
wallaby setup-ha-cms fred barney wilma betty
```

The above invocation will set up fred, barney, wilma, and betty as candidate CMs, place them in the `PotentialCMs` group (creating this group if necessary), and configure Wallaby's default group to use the highly-available CM cluster. (The `setup-ha-cms` command takes options to put candidate CMs in a different group or apply this configuration to some subset of the pool; invoke it with `--help` for more information.)
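To make those options concrete, here is a minimal plain-Ruby sketch of how the command's `--cm-group` and `--client-group` options and their defaults (`PotentialCMs` and `+++DEFAULT`) combine with the host arguments. It uses Ruby's standard `OptionParser` and does not contact a Wallaby store; `parse_setup_args` is a hypothetical name, and the groups `SiteCMs` and `Submitters` are made-up examples.

```ruby
require "optparse"

# Hypothetical stand-alone parser mirroring setup-ha-cms's two options.
# Returns [settings, hosts]; it only parses arguments.
def parse_setup_args(argv)
  # Defaults match the ones the command falls back to.
  settings = { cm_group: "PotentialCMs", client_group: "+++DEFAULT" }
  parser = OptionParser.new do |opts|
    opts.on("--cm-group GROUP") { |grp| settings[:cm_group] = grp }
    opts.on("--client-group GROUP") { |grp| settings[:client_group] = grp }
  end
  # parse (unlike parse!) leaves argv untouched and returns the
  # non-option arguments, i.e. the candidate CM hostnames.
  hosts = parser.parse(argv)
  [settings, hosts]
end

# Default groups: the whole pool (+++DEFAULT) uses the HA CMs.
p parse_setup_args(%w[fred barney wilma betty])
# Custom groups: only members of "Submitters" get the new CONDOR_HOST.
p parse_setup_args(%w[--cm-group SiteCMs --client-group Submitters fred barney])
```

Pointing `--client-group` at a smaller group is how the configuration can be limited to a subset of the pool.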

Once you’ve set up your candidate CMs, be sure to activate the new configuration:

```
wallaby activate
```

Of course, `wallaby activate` will alert you to any problems that prevent your configuration from taking effect. Correct any errors that come up and activate again if necessary. The `setup-ha-cms` command is a pretty simple example of automating configuration, but it saves a lot of repetitive and error-prone effort!

UPDATE: The command will now remove all nodes from the candidate CM group before adding any nodes to it. This ensures that if the command is run multiple times with different candidate CM node sets, only the most recent set will receive the candidate CM configuration. (The command as initially posted would apply the candidate CM configuration to every node that was in the candidate CM group at invocation time, but only those nodes that were named in its most recent invocation would actually become candidate CMs.) Thanks to Rob Rati for the observation.
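To see why the order matters, here is a toy model in plain Ruby. It is not Wallaby's API: `reset_group` and `members_of` are hypothetical helpers operating on a hash from node names to sets of group names. Running it twice with different host sets leaves only the second set in the group; skipping the removal step would instead leave all four hosts (fred, barney, wilma, and betty) in it.

```ruby
require "set"

# Hypothetical in-memory model: node name => Set of group names.
# Illustrates remove-then-add ordering only; this is not Wallaby's API.
def reset_group(memberships, group, new_members)
  # First drop the group from every node that currently has it...
  memberships.each_value { |groups| groups.delete(group) }
  # ...then add it back only to the nodes named in this invocation.
  new_members.each { |node| (memberships[node] ||= Set.new) << group }
  memberships
end

# Sorted names of all nodes that currently belong to the group.
def members_of(memberships, group)
  memberships.select { |_node, groups| groups.include?(group) }.keys.sort
end

m = {}
reset_group(m, "PotentialCMs", %w[fred barney wilma])
reset_group(m, "PotentialCMs", %w[fred betty])
puts members_of(m, "PotentialCMs").inspect # prints ["betty", "fred"]
```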

```ruby
# cmd_setup_ha_cms.rb: sets up highly-available central managers
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

module Mrg
  module Grid
    module Config
      module Shell
        class SetupHaCms < ::Mrg::Grid::Config::Shell::Command
          def self.opname
            "setup-ha-cms"
          end

          def self.description
            "sets up highly-available central managers"
          end

          def init_option_parser
            OptionParser.new do |opts|
              opts.banner = "Usage: wallaby #{self.class.opname} [OPTIONS] CM_HOST [...]\n#{self.class.description}"

              opts.on("-h", "--help", "displays this message") do
                puts @oparser
                exit
              end

              opts.on("--cm-group GROUP", "Wallaby group for potential central managers" \
                      " (will be created if it doesn't exist; default is 'PotentialCMs')") do |grp|
                @cm_group_name = grp
              end

              opts.on("--client-group GROUP", "Wallaby group for nodes that will use these HA CMs" \
                      " (will be created if it doesn't exist; default is '+++DEFAULT')") do |grp|
                @client_group_name = grp
              end
            end
          end

          def act
            @cm_group_name ||= "PotentialCMs"
            @client_group_name ||= "+++DEFAULT"
            cms = @wallaby_command_args

            # Guard: with no hosts, CONDOR_HOST would be set to an empty string for every client.
            if cms.empty?
              $stderr.puts "#{self.class.opname}: at least one CM_HOST is required"
              return 1
            end

            # Create whichever of the two groups doesn't exist yet.
            store.checkGroupValidity([@cm_group_name, @client_group_name]).each do |grp|
              store.addExplicitGroup(grp)
            end

            cm_group = store.getGroupByName(@cm_group_name)
            client_group = store.getGroupByName(@client_group_name)

            # e.g. "fred:$(HAD_PORT), barney:$(HAD_PORT)"
            had_list = cms.map {|cm_name| "#{cm_name}:$(HAD_PORT)"}.join(", ")
            # e.g. "fred:$(REPLICATION_PORT), barney:$(REPLICATION_PORT)"
            replication_list = cms.map {|cm_name| "#{cm_name}:$(REPLICATION_PORT)"}.join(", ")
            # e.g. "fred, barney"
            condor_host = cms.join(", ")

            # Candidate CMs get the HA CM feature and the had/replication peer lists;
            # clients get a CONDOR_HOST naming every candidate CM.
            cm_group.modifyFeatures("ADD", ["HACentralManager"], {})
            cm_group.modifyParams("ADD", {"REPLICATION_LIST"=>replication_list, "HAD_LIST"=>had_list})
            client_group.modifyParams("ADD", {"CONDOR_HOST"=>condor_host})

            # Empty the CM group first so that only the hosts named in this invocation end up in it.
            cm_group.membership.each do |node|
              store.getNode(node).modifyMemberships("REMOVE", [@cm_group_name], {})
            end

            cms.each do |cm|
              store.getNode(cm).modifyMemberships("ADD", [@cm_group_name], {})
            end

            return 0
          end
        end
      end
    end
  end
end
```